Testing Fundamentals and Principles

February 15, 2021

Today’s session covered common testing terminology, how it is used in the workplace compared with the technical definitions, and the Software Testing Life Cycle (STLC).

Common Language used in Testing

The aim here was to introduce us to commonly used terms and what they are generally agreed to encompass. Our session lead Beth highlighted that there is always some variation and debate around definitions. For example, some may think a ‘bug’ can only happen in production, and that any issues found in the code before production shouldn’t be called bugs. ISTQB has 5 different bug type distinctions, but the definitions we are looking at are in line with how these terms are used day to day in a software testing team.

Bug

Something that isn’t working correctly, according to a set requirement.

Sometimes called failures, faults or mistakes. Most testers call any mismatch between expected and actual behaviour a bug.

Requirement

- Descriptions of the features and functionality of the target system, i.e. what the target system is supposed to do.

- Requirements will normally come from the business but sometimes you may have to make your own. All the requirements combined should cover what this product needs to do.

Risk

- The possibility of a negative or undesirable outcome.

- You can manage risk, but you can’t realistically eliminate all risk and all bugs. Risk is something testers need to be acutely aware of and thinking about throughout all stages of the STLC.

- Risk can affect different users to different extents. Something that is a minor inconvenience to the majority may pose far more of a problem to other users, i.e. it is high-risk for the latter group.

Confirmation Testing

- Checking that a new part / feature works i.e. confirm that it does what it is supposed to do.

- Example: when adding a button that links to the checkout page, will clicking this button take you there?
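A minimal sketch of a confirmation check for the checkout-button example. The `Button` and `Browser` classes and the `/basket` and `/checkout` paths are hypothetical stand-ins for a real page and UI driver, not something from the session.

```python
from dataclasses import dataclass


@dataclass
class Button:
    """Hypothetical button that navigates to a target path when clicked."""
    label: str
    target: str


class Browser:
    """Hypothetical in-memory stand-in for a real browser or UI driver."""

    def __init__(self, start_path: str = "/basket") -> None:
        self.current_path = start_path

    def click(self, button: Button) -> None:
        # Clicking a button navigates to the path it links to.
        self.current_path = button.target


def test_checkout_button_goes_to_checkout() -> None:
    # Confirmation test: the new feature does what the requirement says.
    browser = Browser()
    checkout = Button(label="Checkout", target="/checkout")
    browser.click(checkout)
    assert browser.current_path == "/checkout"


test_checkout_button_goes_to_checkout()
```

In a real project the same assertion would probably sit behind a UI automation tool, but the shape of the check (do the action, compare against the requirement) stays the same.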

Regression Testing

- Checking that a new feature does not impact on existing functionality.

Testers are well-positioned to carry out regression testing. Testers see the bigger picture of how the software fits together, whereas devs often only work on one part of it, e.g. front-end or back-end. For example, a new back-end component might break a front-end UI component because an API call now fails. A tester has the higher-level view of how things come together and how they can impact each other, so could locate the issue more easily.
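To make the difference from confirmation testing concrete, here is a small sketch. The `basket_total` function and the `SAVE10` discount code are invented for illustration: the discount is the "new feature", and the regression tests check that behaviour which existed before it still holds.

```python
from typing import Optional, Sequence


def basket_total(prices: Sequence[float], discount_code: Optional[str] = None) -> float:
    """Hypothetical basket function. The discount_code argument is the new feature."""
    total = sum(prices)
    if discount_code == "SAVE10":
        total *= 0.9  # new behaviour: 10% off
    return round(total, 2)


def test_new_discount_is_applied() -> None:
    # Confirmation test for the new feature.
    assert basket_total([20.0], discount_code="SAVE10") == 18.0


def test_total_without_code_is_unchanged() -> None:
    # Regression test: behaviour that existed before the change still holds.
    assert basket_total([10.0, 5.5]) == 15.5


def test_empty_basket_is_still_zero() -> None:
    # Regression test: an existing edge case still behaves as before.
    assert basket_total([]) == 0


test_new_discount_is_applied()
test_total_without_code_is_unchanged()
test_empty_basket_is_still_zero()
```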

Negative Testing

- Checking that something does not fail when given an unexpected input. May require some out-of-the-box thinking about how a user could try to interact in a way that doesn’t match the expected route through the functionality.
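As a rough illustration, assume a hypothetical `parse_quantity` function that should only accept whole numbers from 1 to 99. A negative test feeds it inputs a user should not enter and checks that each one is rejected cleanly rather than crashing or being silently accepted.

```python
def parse_quantity(raw: str) -> int:
    """Hypothetical input handler: convert a quantity field to an int from 1 to 99."""
    try:
        quantity = int(raw.strip())
    except ValueError:
        raise ValueError(f"not a whole number: {raw!r}") from None
    if not 1 <= quantity <= 99:
        raise ValueError(f"quantity out of range: {quantity}")
    return quantity


def rejects(raw: str) -> bool:
    """Return True if parse_quantity rejects the input with a ValueError."""
    try:
        parse_quantity(raw)
    except ValueError:
        return True
    return False


# Inputs that do not match the expected route through the functionality.
unexpected_inputs = ["", "abc", "-1", "0", "100", "2.5", "1e3"]
assert all(rejects(raw) for raw in unexpected_inputs)

# A valid input still works, even with stray whitespace.
assert parse_quantity(" 3 ") == 3
```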

Technical Debt

- A build-up of bugs that haven’t yet been resolved. Teams sometimes tackle these in ‘bug bashes’, dedicating a period of time to clearing the backlog.

What are the different types of testing?

The testing types are the different aspects you want to carve your testing efforts up into, like different sides of the same die.

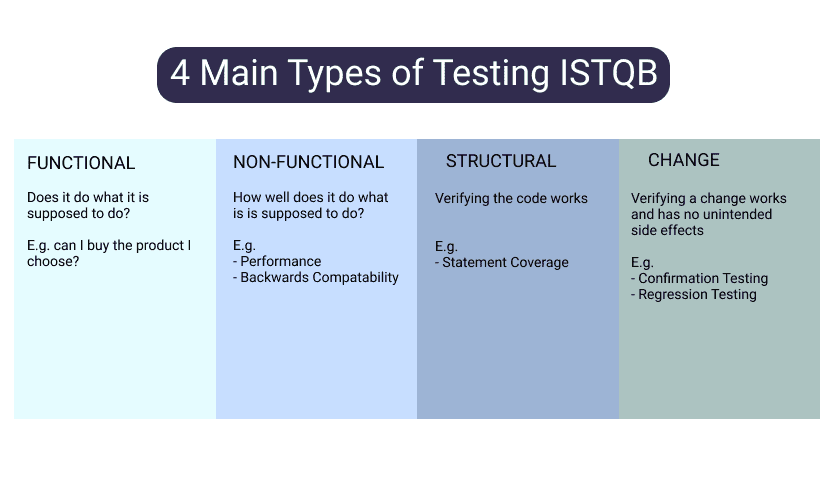

4 main types of testing come from ISTQB: functional, non-functional, structural and change-related.

Further notes:

Backward compatibility – does a new feature work with earlier / all release versions of the product? E.g. does a page load correctly on the last 10 versions of the Chrome browser?

Structural – verifying that the code works! Checking the logical paths through the code, e.g. measuring statement coverage and checking each path that results: check the ‘if Y’ path, then check the ‘else if X’ path.
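A small sketch of what checking each path can look like. The `shipping_cost` function and its prices are made up for illustration; the point is that there is one check per branch, so every statement and every decision outcome is executed at least once.

```python
def shipping_cost(order_total: float, is_member: bool) -> float:
    """Hypothetical function with three logical paths."""
    if is_member:
        return 0.0
    elif order_total >= 50:
        return 2.5
    else:
        return 5.0


# One check per path through the code.
assert shipping_cost(10, is_member=True) == 0.0      # 'if' path
assert shipping_cost(50, is_member=False) == 2.5     # 'elif' path
assert shipping_cost(49.99, is_member=False) == 5.0  # 'else' path
```

A coverage tool can then report whether any line or branch was never run by the tests.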

Structural and Change could fit under functional and non-functional, e.g. functional confirmation testing and regression performance testing.

Types of testing is very much an area where the ways of defining and grouping them differ from individual to individual and company to company. The difference can be in what falls under each group, or in using different concepts from functional/non-functional, e.g. black/white/grey box testing.

What is the logical order of the test process?

The ‘logical order’ is known as the Software Testing Life Cycle (STLC).

If you want to test something end-to-end, where do you start and how do you know when it’s done?

5 phases:

- Planning

- Design

- Execution

- Reporting

- Closure

1. Planning

During test planning we ensure we understand the goals and objectives of the customers, stakeholders and the project.

We need to know the risks which testing is intended to address.

We can decide which test areas are highest priority, e.g. security, usability.

Based on the understanding we gain, we can set goals and objectives for testing, deriving a test plan, approach and mission that give focus and structure as we move into designing tests.

The way you go about this will differ from workplace to workplace, but typical tasks might include:

- outlining your definition of what ‘done/good enough’ looks like when testing a feature

- attending a sprint planning meeting (Agile Scrum methodology)

- refinement sessions, e.g. a ‘3 amigos session’ with a product owner (the ‘voice’ of the customer, who understands the business and the customer), a dev and a UX designer, all in a room to hammer out the whats and whys of the product. It’s a time to ask lots of questions

2. Design Phase

Creating our tests. This could be writing (automated) scripts. It could be setting out charters for an exploratory testing session, instructions for a bug bash, or a set of high-level objectives.

Test analysis and design is the activity where general testing objectives get turned into tangible test conditions and designs (ISTQB). We take the general testing objectives identified in the planning phase and build them up into test designs and test procedures; in short, how you will test it.

Can involve using software to create test artefacts.

3. Execution

Implement and execute: Build an environment and run the tests

Environment example: pulling code into a test environment, e.g. using Docker images to spin up a new environment and seed it with the test data.

A lot of reworking goes into this stage to improve quality.

SDETs and DevOps engineers in the team may actually handle this step in part or fully.

Devs might execute the tests and your role might be that of a ‘test coach’ (AKA testing coach / advocate), advising those running the tests on the best practices they can use.

Your role might involve different areas. You could work in a team where you have a specific role, e.g. back-end testing of microservices or front-end testing looking at UX, or specialise in something, e.g. security or performance testing. Or you might even be a one-man band who covers all areas!

DevOps environment – test execution tends to happen earlier in the cycle, with devs pair testing with a tester to improve the code. Alternatively, changes are released into live but toggled to hidden until testing is confirmed as complete.
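The "released but toggled to hidden" idea is often implemented with a feature flag. Here is a minimal sketch, assuming a hypothetical flag called `new_checkout` held in a plain dictionary; real teams would more likely use a configuration service or a feature-flag tool.

```python
from typing import Mapping


def checkout_page(flags: Mapping[str, bool]) -> str:
    """Return which checkout a user sees; 'new_checkout' is a hypothetical flag name."""
    if flags.get("new_checkout", False):
        return "new checkout"
    return "existing checkout"


live_flags = {"new_checkout": False}   # deployed to live, but hidden from users
tester_flags = {"new_checkout": True}  # switched on for testing only

assert checkout_page(live_flags) == "existing checkout"
assert checkout_page(tester_flags) == "new checkout"
assert checkout_page({}) == "existing checkout"  # a missing flag defaults to hidden
```

Defaulting a missing flag to hidden is a design choice in this sketch: if the configuration is lost, users fall back to the behaviour that has already been tested.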

4. Reporting

- Evaluating whether the results of testing have met the objectives

- Example: could be done through checking logs to see if the feature does the correct thing

- Assess the exit criteria – have we done enough testing?
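Exit criteria are agreed by each team, so the following is only a sketch under assumed criteria: at least 95% of executed tests pass and no critical bugs remain open. The `RunSummary` class, the threshold and the field names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RunSummary:
    """Hypothetical summary of a test run."""
    passed: int
    failed: int
    open_critical_bugs: int


def exit_criteria_met(summary: RunSummary, min_pass_rate: float = 0.95) -> bool:
    """Assumed criteria: enough tests pass and no critical bugs remain open."""
    total = summary.passed + summary.failed
    if total == 0:
        return False  # nothing was run, so we cannot claim enough testing
    pass_rate = summary.passed / total
    return pass_rate >= min_pass_rate and summary.open_critical_bugs == 0


assert exit_criteria_met(RunSummary(passed=96, failed=4, open_critical_bugs=0))
assert not exit_criteria_met(RunSummary(passed=96, failed=4, open_critical_bugs=1))
assert not exit_criteria_met(RunSummary(passed=90, failed=10, open_critical_bugs=0))
```

A check like this supports the conversation about whether enough testing has been done; it does not replace the judgement of the team.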

Potential documents that may be written:

- A go or no-go release note

- Test exit report - what has / hasn’t been done – the current risks – more time needed for xyz, or we think it is covered. From this it is more likely to be a group decision on how to proceed, whereas ‘go or no-go’ can be perceived more as the tester being the gatekeeper of whether the quality is high enough for release.

5. Closure

- In practice it can overlap a lot with Reporting

- ISTQB – Closure is about collecting data from completed test activities to inform key stakeholders

- Looking from a big-picture level / high-level

- Finding improvements to the overall software process (not the low-level detail, which is what differentiates it from reporting)

Basic Test Techniques

Lastly we had a brief intro to the terminology of test techniques and how they are grouped. How can we structure test techniques into an order or groups that allow us to assess the best method?

We looked at dynamic rather than static techniques. Dynamic testing is performed in an environment where the code is actually executed.

3 classifications of dynamic testing technique:

Structure-Based

- Statement

- Decision

- Condition(s)

Experience-Based

- Error guessing

- Exploratory testing

Specification-Based

- Boundary value analysis

- Equivalence partitioning

- Use Case Mapping

- State Transition
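Two of the specification-based techniques listed above, equivalence partitioning and boundary value analysis, can be sketched against a made-up rule. Assume a hypothetical requirement that an age field accepts whole numbers from 18 to 65 inclusive.

```python
def is_valid_age(age: int) -> bool:
    """Hypothetical rule under test: ages 18 to 65 inclusive are accepted."""
    return 18 <= age <= 65


# Equivalence partitioning: one representative value per partition
# (below the range, inside the range, above the range).
partitions = {10: False, 40: True, 80: False}

# Boundary value analysis: values on and either side of each boundary.
boundaries = {17: False, 18: True, 19: True, 64: True, 65: True, 66: False}

for age, expected in {**partitions, **boundaries}.items():
    assert is_valid_age(age) is expected, age
```

Partitioning keeps the number of tests small, while the boundary values target the places where off-by-one mistakes tend to hide.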

Final Challenges

Riskstorm one area for a vending machine – see the next blog post!

Research and break down what one of the test technique terms means.

Use Case Mapping

The ‘use cases’ are the functionalities outlined in the requirements

Describing a particular use of the system and breaking down the steps that go into it

The steps follow the successful path or ‘happy path’, but an Extensions section lists any other scenarios that could occur (edge cases/errors), e.g. select a product in your size -> is it in or out of stock?
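One way to picture this is to write the use case down as data and derive the scenarios to test from it. This is only a sketch: the `UseCase` class, the step wording, the `2a`/`4a` extension ids and the payment extension are all invented for illustration, building on the in-stock / out-of-stock example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class UseCase:
    name: str
    main_steps: List[str]  # the happy path, in order
    extensions: Dict[str, str] = field(default_factory=dict)  # step id -> other scenario


def scenarios_to_test(use_case: UseCase) -> List[str]:
    """One scenario for the happy path, plus one per extension."""
    names = [f"{use_case.name}: happy path"]
    for step_id, description in use_case.extensions.items():
        names.append(f"{use_case.name}: extension {step_id} ({description})")
    return names


buy_item = UseCase(
    name="Buy a product",
    main_steps=[
        "1. Customer opens the product page",
        "2. Customer selects a product in their size",
        "3. Customer adds the product to the basket",
        "4. Customer pays at the checkout",
    ],
    extensions={
        "2a": "selected size is out of stock",
        "4a": "payment is declined",
    },
)

for scenario in scenarios_to_test(buy_item):
    print(scenario)
```

Each printed line is a candidate test: the happy path first, then one test per entry in the Extensions section.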